Supercomputer Mass Tape Migration

Tape Ark specializes in migrating large volumes of data from active supercomputers and high-performance computing environments, and has performed the largest migration of tape-based data ever completed.

Due to rapid changes in processing technology, quantum computing, and high-performance computing (HPC), supercomputer facilities are looking to migrate their data to new platforms to take advantage of this new technology. However, these facilities tend to be anchored to data that was created on the old system and is often held in massive multi-petabyte or even exabyte collections stored in proprietary formats.

Being tethered to these legacy tape collections can slow companies down or even prevent them from taking advantage of the latest technology. At Tape Ark, we believe some of the most important discoveries of our time will be made by combining historical content with new technology to derive profound results. The results may take the form of higher-resolution output, deeper learning, or better access to artificial intelligence (AI) and machine learning (ML) at new scales.

Tape Ark has the background to move data collections at the scale of hundreds of petabytes or exabytes between old and new environments, even when these environments are still live and the data sits in active tape libraries and robotic silos.

Tape Ark can migrate between a variety of sources, destinations and tape formats. Whether it is tape to tape, tape to disk, tape to cloud or tape to virtual tape library (VTL) on disk or cloud, we have you covered.

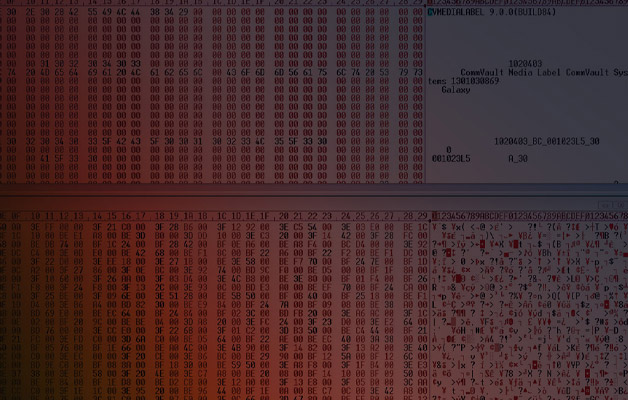

We have completed projects that require bespoke decoding of data formats, duplicate detection at massive scale, and file renaming and mapping measured in the billions of files. See the complete list of data formats, tape formats and robotic tape libraries that we work with. Don’t see the format you are interested in? Reach out.
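To give a sense of what duplicate detection involves, here is a minimal Python sketch that groups files by size and then by SHA-256 content hash. It is an illustration only, not a description of Tape Ark's tooling: the function names `file_digest` and `find_duplicates` are hypothetical, and it assumes the files have already been restored from tape to a local directory.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in fixed-size chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root):
    """Return lists of paths under root whose contents are byte-identical."""
    # First pass: bucket by size, which needs only metadata and no file reads.
    by_size = defaultdict(list)
    for p in Path(root).rglob("*"):
        if p.is_file():
            by_size[p.stat().st_size].append(p)

    # Second pass: hash only files that share a size with at least one other file.
    groups = defaultdict(list)
    for size, paths in by_size.items():
        if len(paths) < 2:
            continue  # a unique size cannot have a duplicate
        for p in paths:
            groups[(size, file_digest(p))].append(p)

    return [sorted(ps) for ps in groups.values() if len(ps) > 1]
```

Bucketing by size first avoids reading files that cannot possibly match. At the scale of billions of files, the same idea would more likely run against a catalog or database than against an in-memory dictionary.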

Supercomputing facilities can handle a wide variety of data formats that help support a range of scientific and engineering applications. Some of the common data formats found in these facilities include:

Scientific Data Formats

- NetCDF (Network Common Data Form): Commonly used for array-oriented scientific data, especially in climate and weather models.

- HDF5 (Hierarchical Data Format version 5): Used for storing large amounts of numerical data and supporting complex data models, common in astronomy, physics, and environmental science.

- CDF (Common Data Format): Used in space science and related fields.

- FITS (Flexible Image Transport System): Standard format in astronomy for storing, transmitting, and analyzing scientific datasets, especially images.
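Each of the four formats above begins with a recognizable byte signature, so a recovered file can often be identified from its first few bytes even when its name or extension has been lost. The Python sketch below illustrates the idea under stated assumptions: `sniff_format` is a hypothetical helper, it checks only the start of the file, and it covers only the NetCDF classic, HDF5, CDF version 3 and FITS signatures.

```python
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"   # 8-byte HDF5 superblock signature
CDF3_MAGIC = b"\xcd\xf3\x00\x01"        # first magic number of a CDF version 3 file
FITS_FIRST_CARD = b"SIMPLE  ="          # a FITS primary header starts with the SIMPLE keyword


def sniff_format(header: bytes) -> str:
    """Guess a scientific data format from the first bytes of a file."""
    if header.startswith(HDF5_SIGNATURE):
        # NetCDF-4 files are stored as HDF5, so they are reported as HDF5 here.
        # Assumption: no HDF5 user block, i.e. the signature sits at offset 0.
        return "HDF5"
    if header[:3] == b"CDF" and header[3:4] in (b"\x01", b"\x02", b"\x05"):
        # NetCDF classic, 64-bit offset and 64-bit data variants.
        return "NetCDF classic"
    if header.startswith(CDF3_MAGIC):
        return "CDF"
    if header.startswith(FITS_FIRST_CARD):
        return "FITS"
    return "unknown"


def sniff_file(path: str) -> str:
    """Read the first 16 bytes of a file and guess its format."""
    with open(path, "rb") as f:
        return sniff_format(f.read(16))
```

A signature check like this is only a first-pass triage. Confirming that a file is intact still requires opening it with the format's own library.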